Many people I follow on Twitter have joined the AWS Community Builders program. The program is very attractive, and for me a significant draw is the mentorship, which puts you in contact with so many bright people. If you join, you are expected to be active in two areas. After Serverless, of course, I would choose Machine Learning.

I am not an expert on Machine Learning, but I am fascinated by AI. What better idea than to learn something new, like on the first day at work?

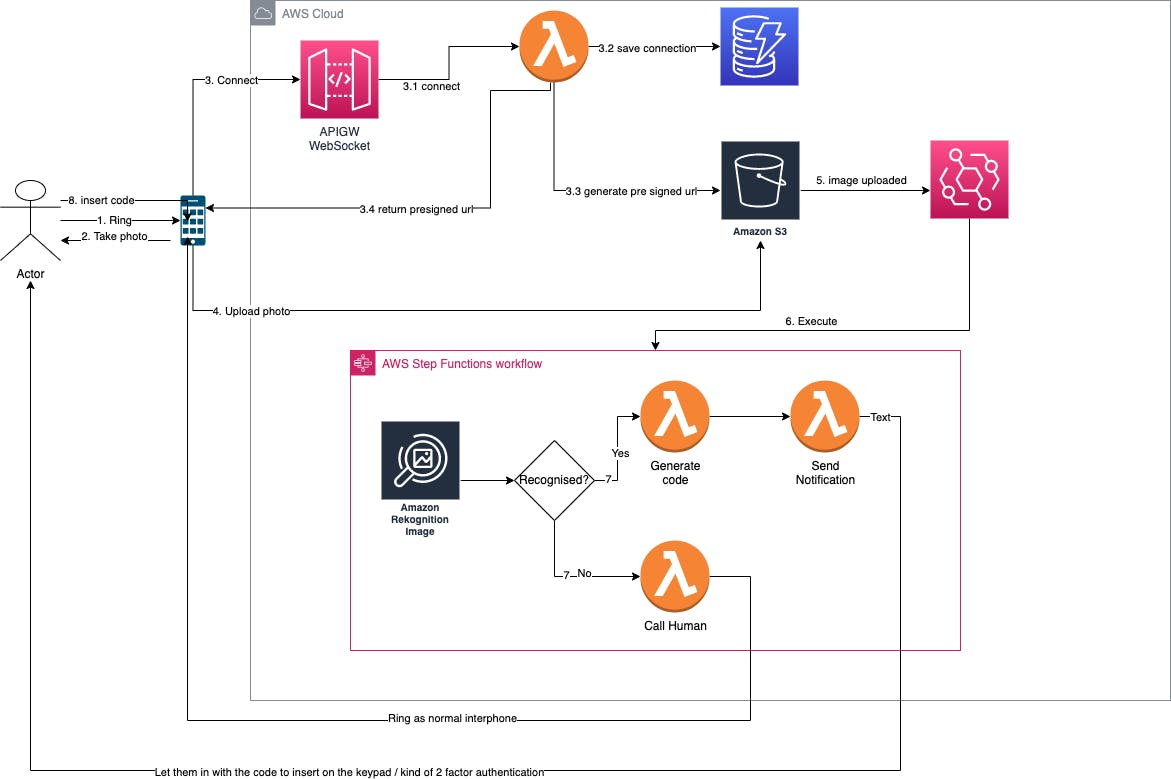

Project doorbell

I will build a super tiny version of Amazon Ring. Writing this makes me laugh, but it is an idea and an excuse to build something.

In this blog post, I will try out the Amazon Rekognition part, because the rest are standard serverless topics and I will dedicate future posts to them. Maybe with some code written in Rust (I have not decided yet).

Requirements

- I ring my doorbell.

- The doorbell takes a picture of me.

- The doorbell opens a WebSocket connection and gets back a pre-signed URL.

- It uploads the photo to S3.

- An event is sent to EventBridge, which executes the Step Function.

- The Step Function compares my face with the uploaded photo.

- If it is me, it sends a code to my phone (2FA). Otherwise, it calls a human.

- I insert the code, and if it matches, I can go in.

It is fun, with many little things to play with, and I think I will do a post for each point.

Amazon Rekognition

It is one of many AWS AI services and can easily be integrated with your application. For example, you provide an image or video to the Amazon Rekognition API, and the service can identify objects, people, text, scenes, and activities. There are many use cases and many APIs to choose from.

For this little project, I am interested in:

- Face-based user verification – Amazon Rekognition enables your applications to confirm user identities by comparing their live image with a reference image.

- CompareFaces - Compares a face in the source image with each face detected in the target image.

There are two types of API operations:

- Non-storage operations

- Storage operations

CompareFaces is a non-storage image operation, so Amazon Rekognition does not persist any information from the request. Storage operations, by contrast, keep certain facial information in Amazon Rekognition.

This is the request that we use to compare the faces:

{

"SimilarityThreshold": number, // The minimum confidence (0 to 100) that a match must meet to be included in FaceMatches

"SourceImage": {

"S3Object": {

"Bucket": "string",

"Name": "string"

}

},

"TargetImage": {

"S3Object": {

"Bucket": "string",

"Name": "string"

}

}

}

This request leaves out the QualityFilter property, so it uses the default value, NONE. The AWS Developer Guide describes QualityFilter as a filter that specifies a quality bar for how much filtering is done to identify faces. Filtered faces aren't compared. If you select AUTO, Amazon Rekognition chooses the quality bar. If you specify LOW, MEDIUM, or HIGH, filtering removes all faces that don't meet the quality selected. The quality bar is based on a variety of everyday use cases. Low-quality detections can occur for several reasons: an object that's misidentified as a face, a face that's too blurry, or a face with a pose that's too extreme to use. If you specify NONE, no filtering is performed.
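As a rough illustration, the request can be assembled in Python. This is only a sketch: the helper name `build_compare_faces_request` and the bucket and key values are made up for the example, and nothing is sent to AWS, so it runs without credentials.

```python
from typing import Any

# Values the guide lists for QualityFilter.
QUALITY_FILTERS = {"NONE", "AUTO", "LOW", "MEDIUM", "HIGH"}


def build_compare_faces_request(
    bucket: str,
    source_key: str,
    target_key: str,
    similarity_threshold: float = 70.0,
    quality_filter: str = "NONE",
) -> dict[str, Any]:
    """Build a CompareFaces request for two images stored in the same bucket."""
    if not 0 <= similarity_threshold <= 100:
        raise ValueError("similarity_threshold must be between 0 and 100")
    if quality_filter not in QUALITY_FILTERS:
        raise ValueError(f"unknown quality filter: {quality_filter}")
    return {
        "SimilarityThreshold": similarity_threshold,
        "QualityFilter": quality_filter,
        "SourceImage": {"S3Object": {"Bucket": bucket, "Name": source_key}},
        "TargetImage": {"S3Object": {"Bucket": bucket, "Name": target_key}},
    }


# Hypothetical bucket and object names, for illustration only.
request = build_compare_faces_request("my-doorbell-bucket", "source.jpeg", "ring-001.jpeg")
print(request["TargetImage"]["S3Object"]["Name"])
```

With boto3 installed and credentials configured, the same dictionary could be passed along as `boto3.client("rekognition").compare_faces(**request)`.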

If the request succeeds, we will have a very long response, and for my use case, I am interested only in the Confidence level.

{

"output": {

"FaceMatches": [

{

"Face": {

"BoundingBox": {....},

"Confidence": 99.998924,

"Landmarks": [...],

"Pose": {... },

"Quality": {...}

},

"Similarity": 99.9963

}

],

"SourceImageFace": {....},

"outputDetails": {...}

}

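Each match comes with a Similarity score, which says how closely the face matches the source face, and a Confidence level, which says how sure Rekognition is that the bounding box contains a face. It also includes details such as landmarks for parts of the face like the eyes and nose.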
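To make those two numbers concrete, here is a small sketch that pulls them out of a response. The helper `best_match` is a hypothetical name, and the sample is a trimmed copy of the response shown earlier, without the "output" wrapper that Step Functions adds and without the elided fields.

```python
from typing import Any, Optional


def best_match(response: dict[str, Any]) -> Optional[dict[str, float]]:
    """Return Similarity and Confidence of the closest face match, or None."""
    matches = response.get("FaceMatches", [])
    if not matches:
        return None
    top = max(matches, key=lambda m: m["Similarity"])
    return {"Similarity": top["Similarity"], "Confidence": top["Face"]["Confidence"]}


# Trimmed copy of the response from this post (elided fields left out).
sample = {
    "FaceMatches": [
        {"Face": {"Confidence": 99.998924}, "Similarity": 99.9963},
    ]
}

print(best_match(sample))
print(best_match({"FaceMatches": []}))
```

An empty FaceMatches list means that no face in the target image reached the SimilarityThreshold, which is why the helper returns None in that case.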

Amazon Rekognition to the test

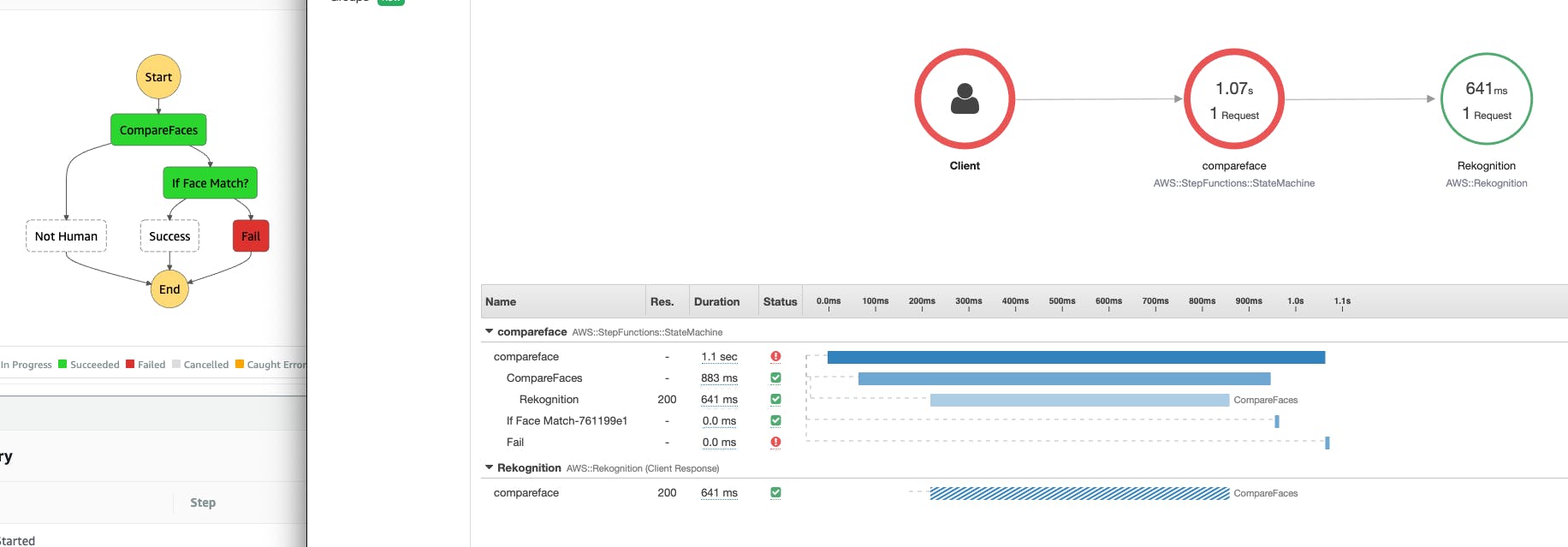

AWS Step Functions orchestrates multiple AWS services and comes with many benefits and built-in features, such as the try/catch that we use in this example.

{

"Comment": "A description of my state machine",

"StartAt": "Pass",

"States": {

"Pass": {

"Type": "Pass",

"Next": "CompareFaces",

"Parameters": {

"bucketName.$": "$.bucket.name",

"input.$": "$.object.key"

}

},

"CompareFaces": {

"Type": "Task",

"Parameters": {

"SimilarityThreshold": 70,

"SourceImage": {

"S3Object": {

"Bucket.$": "$.bucketName",

"Name": "source.jpeg"

}

},

"TargetImage": {

"S3Object": {

"Bucket.$": "$.bucketName",

"Name.$": "$.input"

}

}

},

"Resource": "arn:aws:states:::aws-sdk:rekognition:compareFaces",

"Next": "If Face Match?",

"Catch": [

{

"ErrorEquals": [

"Rekognition.InvalidParameterException"

],

"Next": "Not Human"

}

]

},

"Not Human": {

"Type": "Fail"

},

"If Face Match?": {

"Type": "Choice",

"Choices": [

{

"And": [

{

"Variable": "$.FaceMatches[0]",

"IsPresent": true

},

{

"Variable": "$.FaceMatches[0].Face.Confidence",

"NumericGreaterThan": 70

}

],

"Next": "Success"

}

],

"Default": "Fail"

},

"Success": {

"Type": "Succeed"

},

"Fail": {

"Type": "Fail"

}

}

}
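To make the routing in this state machine easier to follow, here is a plain Python sketch of the Pass and Choice states. The function names and the sample event are assumptions for illustration; the real evaluation is done by Step Functions, not by this code.

```python
from typing import Any


def pass_state(event: dict[str, Any]) -> dict[str, str]:
    """Mirror the Pass state: keep only the bucket name and the object key."""
    return {"bucketName": event["bucket"]["name"], "input": event["object"]["key"]}


def choice_state(result: dict[str, Any], min_confidence: float = 70) -> str:
    """Mirror the "If Face Match?" Choice state and return the next state name."""
    matches = result.get("FaceMatches", [])
    # Same two conditions as the "And" rule: a first match is present,
    # and its Face.Confidence is strictly greater than the limit.
    if matches and matches[0]["Face"]["Confidence"] > min_confidence:
        return "Success"
    return "Fail"  # the Choice state's Default


# Hypothetical input shaped like the paths the Pass state reads.
event = {"bucket": {"name": "my-doorbell-bucket"}, "object": {"key": "ring-001.jpeg"}}
print(pass_state(event))
print(choice_state({"FaceMatches": [{"Face": {"Confidence": 99.9}, "Similarity": 99.9}]}))
print(choice_state({"FaceMatches": []}))
```

Because CompareFaces only puts faces that reach the SimilarityThreshold into FaceMatches, the presence check on the first match is what really decides the outcome here.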

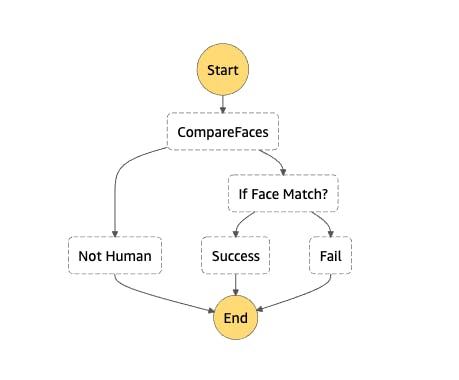

Using the definition above, the result should look like

Creating the AWS Step Function manually from the console will require adding the following permissions to its execution role.

{ "Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::bucketNAME/*" },

{ "Effect": "Allow", "Action": "rekognition:CompareFaces", "Resource": "*" }

Testing the Application

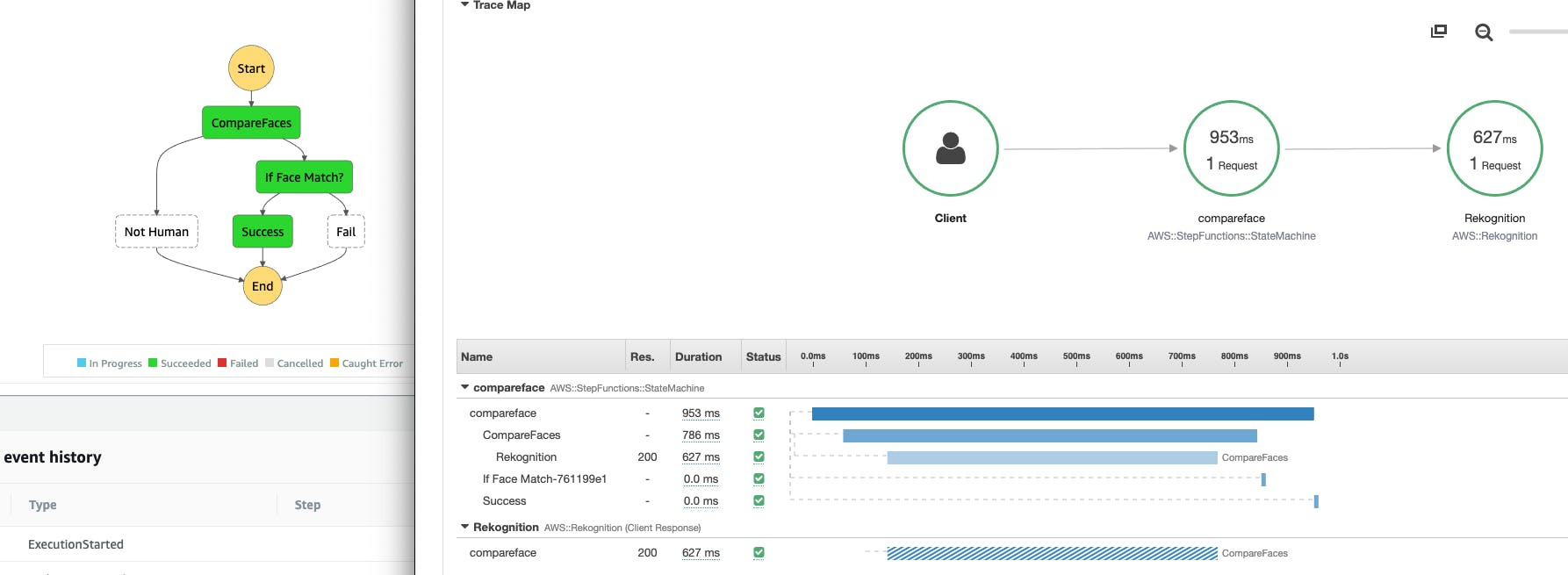

Me

The Step Function succeeds, and we will get a code to open the door.

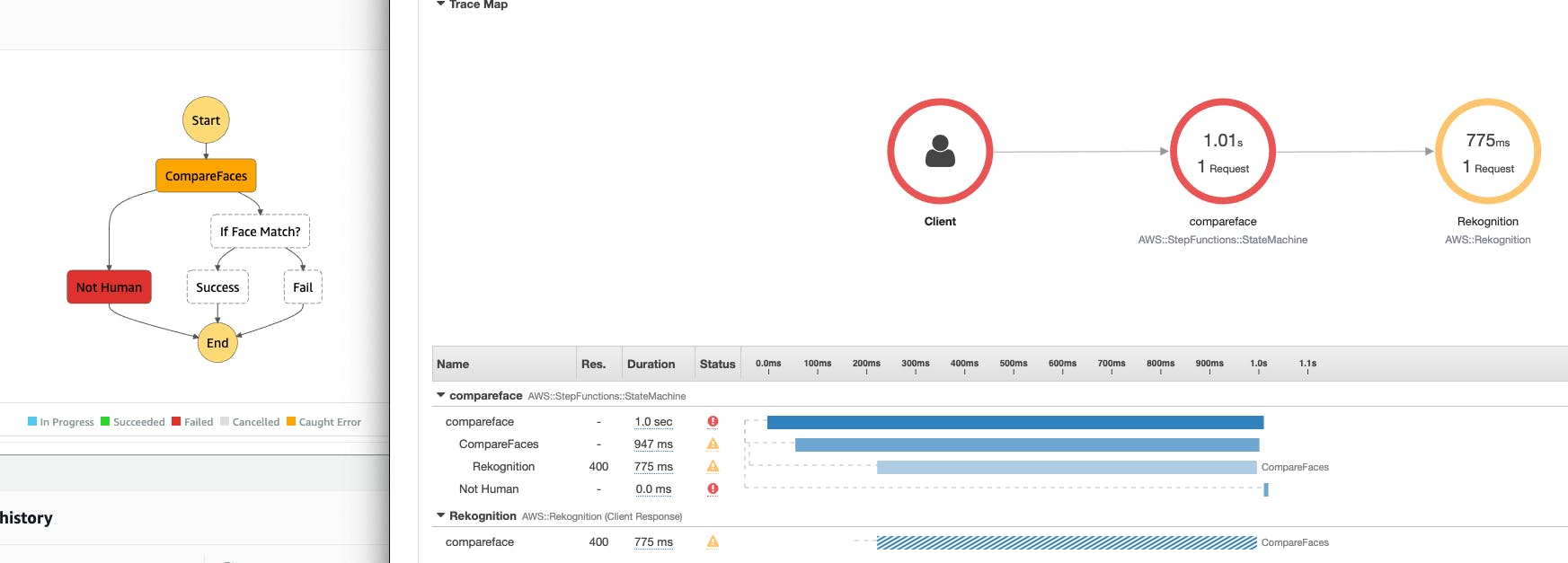

A cat

Amazon Rekognition will fail with

{

"name": "CompareFaces",

"output": {

"Error": "Rekognition.InvalidParameterException",

"Cause": "Request has invalid parameters (Service: Rekognition, Status Code: 400, Request ID: e3c28532-35a7-4922-9576-34b646e31bb6, Extended Request ID: null)"

},

"outputDetails": {

"truncated": false

}

}

We catch the error, and in this case, we go to the step "Not Human".
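The Catch behaviour can be sketched in a few lines of Python. This is a simplified model that assumes exact error-name matching only (wildcards are not modelled), and `next_state_for_error` is a hypothetical helper, not part of any AWS SDK.

```python
from typing import Optional

# The Catch block of the CompareFaces task in the state machine.
CATCHERS = [
    {"ErrorEquals": ["Rekognition.InvalidParameterException"], "Next": "Not Human"},
]


def next_state_for_error(error: str, catchers: list[dict]) -> Optional[str]:
    """Return the Next state of the first catcher that names the error, else None."""
    for catcher in catchers:
        if error in catcher["ErrorEquals"]:
            return catcher["Next"]
    return None  # nothing caught: the execution fails with the original error


print(next_state_for_error("Rekognition.InvalidParameterException", CATCHERS))
print(next_state_for_error("Rekognition.ImageTooLargeException", CATCHERS))
```

Any error that is not listed, such as an image that is too large, is not caught and fails the execution directly instead of going to "Not Human".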

Another person

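In that case no face reaches the similarity threshold, so FaceMatches is empty, the Choice state takes its Default branch, and the execution ends in "Fail".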

Group of people

I cannot show you the image of a group of 4 (me included) because my friends did not permit me to put their faces here.

But the response would be

{

"FaceMatches": [

{

"Face": {

"BoundingBox": {...},

"Confidence": 99.970726,

"Landmarks": [...],

"Pose": {...},

"Quality": {...}

},

"Similarity": 99.99982

}

],

"SourceImageFace": {...},

"UnmatchedFaces": [

{ "BoundingBox": {...} },

{ "BoundingBox": {...} },

{ "BoundingBox": {...} }

]

}
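For a group photo, it can be handy to know how many other faces were in the frame. Here is a minimal sketch with a hypothetical helper `summarize_faces`. The bounding boxes are elided in the response, so empty placeholders stand in for them.

```python
from typing import Any


def summarize_faces(response: dict[str, Any]) -> dict[str, int]:
    """Count matched and unmatched faces in a CompareFaces response."""
    matched = len(response.get("FaceMatches", []))
    unmatched = len(response.get("UnmatchedFaces", []))
    return {"matched": matched, "unmatched": unmatched, "total": matched + unmatched}


# Shape of the group response: one match (me) and three other faces.
group = {
    "FaceMatches": [{"Face": {"Confidence": 99.970726}, "Similarity": 99.99982}],
    "UnmatchedFaces": [{"BoundingBox": {}}, {"BoundingBox": {}}, {"BoundingBox": {}}],
}

summary = summarize_faces(group)
print(summary)
```

Whether the door should open when I am not alone is a policy decision that this project does not cover. The count is simply there if a later step wants to use it.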

Conclusion

Because I do not have any experience with AI, I expected more trouble. Instead, it was straightforward, and the documentation is clear, which allowed me to find the correct API immediately. Amazon Rekognition has a 12-month free tier that lets you compare 5000 images per month. After that, the price is about $0.0012 per image, so a million comparisons cost about $1,200.

Take a family of four that uses the intercom, plus Amazon Prime deliveries at the door five times a day, every day of the year. With some rounding up, call it 5000 rings per year. That costs about $6, plus the rest of the serverless services.
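The arithmetic behind these numbers, as a small sketch. It uses the per-image price quoted in this post and ignores the free tier. The assumption that each family member rings once a day is mine, added only to show where a figure in the region of 5000 can come from.

```python
PRICE_PER_IMAGE_USD = 0.0012  # per-image price quoted in this post


def yearly_cost(comparisons_per_year: int, price: float = PRICE_PER_IMAGE_USD) -> float:
    """Cost in USD for a number of CompareFaces calls, ignoring the free tier."""
    return round(comparisons_per_year * price, 2)


# Assumption: 4 family members ring once a day, plus 5 deliveries a day.
rings_per_year = (4 + 5) * 365
print(rings_per_year)           # 3285, rounded up generously to 5000 in the post

print(yearly_cost(5_000))       # the doorbell scenario
print(yearly_cost(1_000_000))   # a million comparisons
```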