
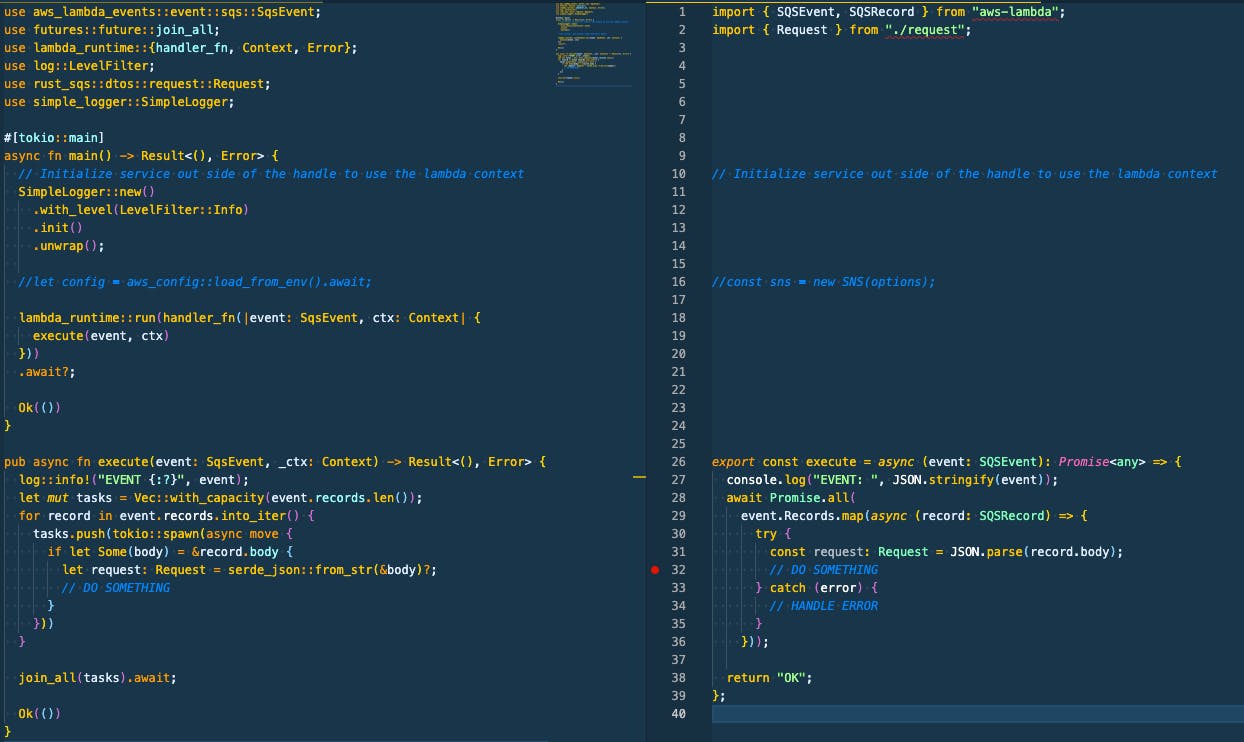

This blog post is the first in a mini-series of code comparisons between Node.js and Rust, and I am starting with Amazon SQS. It does not show how fast Rust is or explain how Rust works. It is a side-by-side code comparison to help you on the journey you have already started to learn Rust.

Why

In the last few years, I have switched languages multiple times between .NET, JavaScript and Rust, and the knowledge acquired with one language is transferable to a new one. We just need to map the similarities mentally to pick up the new language quickly.

Because I was doing this with a friend who was curious to see Serverless Rust in action, I wrote small posts about it.

The Basics

Rust comes with much of its tooling built in, for example:

| JS | Rust |
| --- | --- |
| npm | cargo |
| npm init | cargo init |
| npm install (add a dependency) | cargo add |
| npm install -g (install a tool) | cargo install |
| npm run build | cargo build |
| package.json | Cargo.toml |
| package-lock.json | Cargo.lock |
| webpack | cargo build |
| lint | cargo clippy |
| prettier | cargo fmt |
| doc generation | cargo doc |
| test library like jest | cargo test |

Generate a new SAM-based Serverless App

    sam init --location gh:aws-samples/cookiecutter-aws-sam-rust

Amazon SQS

The official example from the AWS Rust team is here.

The Rust version has more lines of code, mostly because everything is typed and every type you use must be imported explicitly.

After the imports, we have the main() method.

    #[tokio::main]
    async fn main() -> Result<(), Error> {
        // Initialize services outside of the handler so that the
        // Lambda execution context can reuse them across invocations
        SimpleLogger::new()
            .with_level(LevelFilter::Info)
            .init()
            .unwrap();

        //let config = aws_config::load_from_env().await;

        lambda_runtime::run(handler_fn(|event: SqsEvent, ctx: Context| {
            execute(event, ctx)
        }))
        .await?;

        Ok(())
    }

When the Lambda service calls your function, it creates an execution environment. Because your Lambda function can be invoked many times, that environment is kept alive for some time in anticipation of another invocation. When one arrives, the environment is reused, so the best practice is to initialize your classes, SDK clients and database connections outside the handler. This saves execution time and cost on subsequent invocations (warm starts).
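The "initialize once, reuse on warm starts" idea can be sketched with nothing but the standard library. This is only an illustration: `Client`, `client()`, `handle()` and `INIT_COUNT` are hypothetical names, not part of `lambda_runtime` or the AWS SDK, and the sketch assumes Rust 1.70 or later for `OnceLock`.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::OnceLock;

/// Hypothetical stand-in for an SDK client or a database connection.
pub struct Client {
    pub id: usize,
}

/// Counts how many times the expensive setup actually ran.
pub static INIT_COUNT: AtomicUsize = AtomicUsize::new(0);

/// Lives as long as the process, not as long as a single invocation.
static CLIENT: OnceLock<Client> = OnceLock::new();

/// Returns the shared client, building it only on the first (cold) call.
pub fn client() -> &'static Client {
    CLIENT.get_or_init(|| {
        // fetch_add returns the previous value, so the first client gets id 1.
        let id = INIT_COUNT.fetch_add(1, Ordering::SeqCst) + 1;
        Client { id }
    })
}

/// Hypothetical handler body: every invocation reuses the same client.
pub fn handle(message: &str) -> String {
    format!("client {} handled {}", client().id, message)
}
```

Calling `handle` twice runs the setup closure only once. In the Lambda function above, the same effect comes from creating the client in `main()` before `lambda_runtime::run(...)` is called.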

I used an external library for logging in the example, but println!(...) plays the same role as console.log(...).
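One difference from `console.log` is worth a small sketch: Rust prints a struct only if the type implements a formatting trait such as `Debug`. The `Message` type and the `describe` function below are hypothetical and exist only for this illustration.

```rust
/// Hypothetical record type; deriving `Debug` is what makes `{:?}` work.
#[derive(Debug)]
pub struct Message {
    pub id: u32,
    pub body: String,
}

/// Builds the same text that `println!("EVENT {:?}", message)` would print.
pub fn describe(message: &Message) -> String {
    format!("EVENT {:?}", message)
}
```

For a message with `id: 1` and `body: "hello"`, `describe` returns `EVENT Message { id: 1, body: "hello" }`, which is roughly what `console.log("EVENT", message)` gives you for a plain object in Node.js.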

Finally, the code that we are interested in:

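    pub async fn execute(event: SqsEvent, _ctx: Context) -> Result<(), Error> {
        log::info!("EVENT {:?}", event);

        // Reserve one slot per record so the vector never has to grow
        let mut tasks = Vec::with_capacity(event.records.len());

        for record in event.records.into_iter() {
            // Process each record concurrently on its own tokio task
            tasks.push(tokio::spawn(async move {
                if let Some(body) = &record.body {
                    // `Request` is your own payload type (it must implement `Deserialize`)
                    let request: Request = serde_json::from_str(body)?;
                    // DO SOMETHING
                }
                // Give the async block an explicit Result type so that `?` compiles
                Ok::<(), Error>(())
            }));
        }

        // Wait for every task to finish, like Promise.all(...)
        join_all(tasks).await;
        Ok(())
    }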

Apart from the syntax, it is pretty much the same as the Node.js version. The equivalent of Promise.all(...) is join_all from the futures crate.
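To see the "start everything, then wait for everything" shape without pulling in any crate, here is a rough sketch that swaps tokio tasks and `join_all` for plain OS threads from the standard library. It is an analogy, not the code used in the Lambda function, and `process` and `process_all` are hypothetical names. Unlike the `execute` function above, which ignores what `join_all` returns, this version hands the per-record results back to the caller.

```rust
use std::thread;

/// Hypothetical per-record work: parse the body as a number and double it.
pub fn process(body: &str) -> Result<i64, String> {
    body.trim()
        .parse::<i64>()
        .map(|n| n * 2)
        .map_err(|e| format!("bad record {:?}: {}", body, e))
}

/// Starts one thread per record, then collects the results in input order.
/// Returns every result, or the first error found in input order.
pub fn process_all(bodies: Vec<String>) -> Result<Vec<i64>, String> {
    // Fan out: start all the work before waiting on any of it.
    let handles: Vec<_> = bodies
        .into_iter()
        .map(|body| thread::spawn(move || process(&body)))
        .collect();

    // Join: collecting into a Result stops at the first error.
    handles
        .into_iter()
        .map(|handle| match handle.join() {
            Ok(result) => result,
            Err(_) => Err("worker panicked".to_string()),
        })
        .collect()
}
```

With the bodies `"1"`, `"2"` and `"3"` the function returns `Ok(vec![2, 4, 6])`, and a body that is not a number turns the whole call into an `Err`, much like a rejected promise makes `Promise.all` reject.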

If you are wondering about the following:

    let mut tasks = Vec::with_capacity(event.records.len());

Please read the "Capacity and reallocation" section of the Vec documentation.
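A small std-only sketch makes the point concrete: when the final size is known up front, `Vec::with_capacity` reserves the space once and the pushes never trigger a reallocation. The function name `fill_preallocated` is hypothetical.

```rust
/// Pushes `n` items into a vector created with `Vec::with_capacity(n)` and
/// reports whether its capacity ever changed (that is, whether it reallocated).
pub fn fill_preallocated(n: usize) -> (Vec<usize>, bool) {
    let mut items: Vec<usize> = Vec::with_capacity(n);
    let start_capacity = items.capacity();
    let mut reallocated = false;

    for i in 0..n {
        items.push(i);
        if items.capacity() != start_capacity {
            reallocated = true;
        }
    }

    (items, reallocated)
}
```

For any `n`, the returned flag is `false`, because the vector is guaranteed to hold at least `n` elements without growing. In the handler, `event.records.len()` plays the role of `n`.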

If, like my friend, you are thinking that the code is not really the same, I can rewrite the JavaScript part this way:

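    export const execute = async (event: SQSEvent): Promise<string> => {
      console.log("EVENT: ", JSON.stringify(event));

      const promises: Promise<unknown>[] = [];

      // forEach instead of map: we only collect the promises, like the Rust loop does
      event.Records.forEach((record: SQSRecord) => {
        const request: Request = JSON.parse(record.body);
        promises.push(do_something());
      });

      // Wait for every promise, like join_all(tasks).await
      await Promise.all(promises);
      return "OK";
    };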

Conclusion

On my GitHub profile, you can find a few examples, some related to this code comparison and some not.

It is well known that Rust is one of the fastest runtimes for Serverless applications, and we are not here to discuss it. Instead, this series compares pieces of code used in typical serverless applications to show that your skills carry over from one language to another; only the syntax has to be learned. Having the same code written in different languages helped me move quickly to Rust, and I hope it will make your migration to Rust easier too.